
Disclaimer and shameless plug: I work for an awesome company in Australia, Atlassian, that sells a wiki. Confluence can be purchased for as little as $10 for 10 users. The proceeds from your $10 will be donated to charity.

A couple of months ago, I put up the post, CAST 2010: Software Testing the Wiki Way together with my Prezi to advertise my presentation at the Association for Software Testing’s conference in Grand Rapids, Michigan. This post will explain how I use a wiki to test and includes the paper that I wrote for CAST here.

My plans were grand. I was going to run a Weekend Testing session to show people how to use a wiki to manage their tests. I would be educating people about testing with wikis and advertising for WT at the same time! FTW^2!!

Well folks, CAST doesn’t really work that way. At CAST, a third of every session is reserved for a facilitated discussion. When I say facilitated, I mean that there is a system for asking questions and maintaining a discussion thread or killing it if the discussion is not constructive. I’ll be honest: I did not know that there was extra time for questions or that the entire session would be facilitated in a certain way. Once I attended the conference opener, I realized that my Grand Plan did not belong in Grand Rapids and started to feel as though I might be, very much, in over my head.

What you didn’t know about my wiki talk: It’s been extremely challenging to put together. I think I’ve figured out why. Wikis are about using pages, links and writing to set down thought patterns where others can see them and change them as they collaborate. It’s hard to decouple showing the way my thought process works from the actual nuts and bolts of putting wiki pages together for software testing. The way that I manage tests through a wiki will not be the same way Chris McMahon or Matt Heusser manages tests through a wiki. At the same time, the fact that we would all do this differently is the point of using a wiki in the first place. Using a wiki, the three of us could do testing on the same project in different ways or collaborate on some of the same testing, using the wiki to communicate what we’ve done. Although I will only show one way to organize tests in a wiki, you can do testing in plenty of other ways on the same project and link them all together.

Sanity Testing

We do bi-weekly milestone releases for Confluence. Whenever we have one of these releases, we do a set of sanity tests just to check that the basic functionality is still ok. Our checklist lives on a wiki page. As I go through the list, I make a set of notes on a page. If I raise an issue, I’ll post a link to that issue in my notes. I use the same page for every sanity test I do. What I’ve ended up with is a page whose changes I can compare over time and across releases.
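The wiki's page history does this comparison for you, but the idea is easy to sketch outside the wiki as well. Below is a minimal Python sketch, assuming you have copied the text of two revisions of the sanity notes page into strings. The function name `diff_sanity_notes`, the milestone labels and the note contents are all made up for illustration; none of them come from Confluence.

```python
import difflib


def diff_sanity_notes(old_notes, new_notes, old_label, new_label):
    """Return a unified diff (as a list of lines) between two revisions
    of the same sanity-test notes page."""
    return list(
        difflib.unified_diff(
            old_notes.splitlines(),
            new_notes.splitlines(),
            fromfile=old_label,
            tofile=new_label,
            lineterm="",  # the inputs have no trailing newlines, so add none
        )
    )


# Hypothetical notes from two milestone releases (illustrative data only).
milestone_1 = "Login: ok\nCreate page: ok\nAttach file: ok"
milestone_2 = (
    "Login: ok\n"
    "Create page: ok\n"
    "Attach file: fails for large files, see linked issue"
)

changes = diff_sanity_notes(milestone_1, milestone_2, "milestone 1", "milestone 2")
print("\n".join(changes))
```

Lines prefixed with `-` show what the notes said for the earlier release and lines prefixed with `+` show what changed in the later one, which is the same kind of view a wiki's "compare versions" feature gives you.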

Spec Review

Recently, I’ve been taking every opportunity I can to do testing at the beginning of the software process rather than the end. I’ve been looking at specifications when they are mostly but not completely finished. I’ll either leave a comment on the page with a few questions about the spec, or I’ll make a page of a few test objectives. Writing down a few high-level test objectives has proven very helpful to product managers and developers as it gives them edge cases to think about when they are designing features or writing code. These high-level test objectives can be reused later for system testing.

System Testing

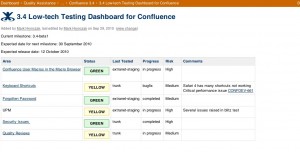

Above is a screenshot of a low-tech testing dashboard. These are not a new concept. I see them mentioned online every now and then. The template I started with is James Bach’s although the test team I work with has altered it somewhat.
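For readers who have not seen one, a dashboard like this is just a table with one row per feature. Here is a minimal Python sketch that renders such a table as wiki-markup text. The column names (effort, coverage, quality, comments) are an assumption loosely based on the usual low-tech dashboard layout, not the altered version our team uses, and the `||heading||`, `|cell|` and `[Page Title]` syntax assumes Confluence-style wiki markup. The feature names and values are invented.

```python
from dataclasses import dataclass


@dataclass
class FeatureRow:
    """One dashboard row. The field names are illustrative assumptions."""
    feature: str        # also used as the title of the test objectives page
    effort: str         # e.g. "none", "low", "high"
    coverage: int       # e.g. 0 (nothing tested) to 3 (tested in depth)
    quality: str        # free-text assessment
    comments: str = ""


def render_dashboard(rows):
    """Render the rows as a wiki-markup table, linking each feature
    to a page of the same name that holds its test objectives."""
    lines = ["||Feature||Effort||Coverage||Quality||Comments||"]
    for row in rows:
        comments = row.comments or " "  # keep empty cells from collapsing
        lines.append(
            f"|[{row.feature}]|{row.effort}|{row.coverage}|{row.quality}|{comments}|"
        )
    return "\n".join(lines)


# Hypothetical features, for illustration only.
dashboard = render_dashboard([
    FeatureRow("Page templates", "high", 2, "ok", "needs retest"),
    FeatureRow("Attachments", "low", 1, "unknown"),
])
print(dashboard)
```

Pasting the printed text into a wiki page gives a table where each feature name is a link, so the dashboard doubles as the index for the test objectives pages.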

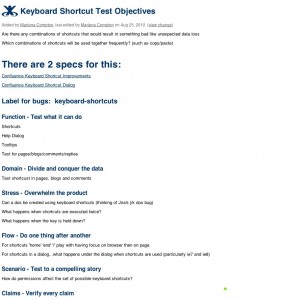

You’ll notice that we’ve got a few features listed. Each feature is linked to a page with test objectives. Here is an example of a page with test objectives.

Confluence has a way to let users build templates for pages, so when I make test objectives, I use our template. This could be replaced with any set of heuristics you want to use. It’s highly likely I’ll be changing this up for my next set of tests.

Since we are now down at the feature level, I will add pages to this for the testing that I do. This is where it gets messy, but that’s ok. What I’ve noticed is that I don’t have much structure past this point. I will either link the test objectives to a page or I will just add a page if I’m session-based testing and write stuff as I go along.

That’s all I do…really. I feel privileged that I’m not asked to come up with tons of documentation or to follow an involved process for documenting the testing. The way I see it, using the wiki to do testing embodies the Agile Manifesto’s “working software over comprehensive documentation.” The documentation looks incomplete because I don’t write everything down. I’m too busy testing.

There are some situations where using a wiki will be challenging. It can be difficult at first for people to be comfortable enough with the openness to edit pages or add comments. If you need to aggregate huge amounts of metadata from your tests, wikis are not there yet. If you have hundreds of tests trapped in a knowledge-based tool, I don’t know how to help you extract those into a wiki.

It is possible to do test automation through a wiki with tools like FitNesse and Selenesse. I haven’t done this, but I know others, including Matt and Chris, have extensive experience with using a wiki in conjunction with automated tests.

At my CAST presentation, I went through my Prezi, which I had rehearsed lots in my hotel room. I then logged into an example wiki with a testing dashboard and some tests I had written. I was a little frustrated that I didn’t have more to show off, but that’s the point of a wiki. You end up with less, but what you do have on the page means more. I also know why Matt was so excited for me to give a presentation about this. I was worried that I didn’t have excessive numbers of pages to show off or highly structured documentation, but this is exactly why wikis are so useful. Wikis cut through the shit.

After I finished showing my examples, Matt showed off how he does testing through his Socialtext wiki. I was also very excited that the Dave Liebreich got up to show off his wiki as well. I’ve followed him since he was blogging at Mozilla, and was already super-stoked to meet him, let alone have him attend my session and show off his wiki.

Thanks to Matt Heusser for encouraging me to submit this as a topic. Since I work in a wiki all day, every day, it’s easy for me to forget how lucky I am and how different this is. I don’t have to worry about which shared drive has the right version of the requirements. I’m no longer chained to a testing tool someone else has told me I must use. I get to arrange my testing in a way that suits my brain. I wish every tester had this opportunity and I hope that what I’ve written helps someone else get started with using a wiki for software testing.

BTW, Trish Khoo just wrote about wiki testing on her blog as well.
