Previous to my attendance at PNSQC, I had a couple of “Bach moments.” PNSQC was no exception. I took his class and believe I was able to distinguish myself as thoroughly mediocre. Is it just me or does that man have “laser focus” when someone says something that interests him? This changed dramatically, however, once I hit the first poster session.

I knew people would look at my poster because it had pictures on it, but I wasn’t quite prepared for the volume of interest. It started right away. As the last pushpin was going into place, I heard someone behind me say, “Um…where’s the quality?” I turned around to see a) baseball cap b) glasses c) beard.

OMG…JAMES BACH!!!!!!!

“Excuse me, but I’m looking and I don’t see any quality here.” He glanced all around my poster from the top left corner to the bottom right corner. “Nope, still no quality.”

Meanwhile, I’m still processing who the hell this is. Love him or hate him, you have to admit that he sits among the “Monsters O’ Test.” As he keeps drilling me, I notice more people showing up behind him. When Monsters O’ Test speak, people listen. I felt like Peter Billingsley, frozen on Santa’s lap in A Christmas Story. The crowd turned into a gang of elves. I know that Mr. Bach was asking me about software quality, but really, all I heard was, “HO! HO! HO!” I think he realized that I was completely petrified, because he kind of backed off. My reviewer had made his way over to my poster and mentioned that Mr. Bach should read my paper.

I honestly think I started babbling, because I don’t remember a word I said. There are a few things I know about myself, including the fact that I have a complete inability to handle meeting people I respect. My fangrrl tendencies are directly proportional to how much I respect someone. This is because my respect can only be earned. (Hey, Atlas Shrugged fans, y’all know what I mean.) I never respect someone just because I hear they are “important.” The flip side is that people I respect mean a lot to me. As an example, I have been such a fangrrl of the book How We Test Software at Microsoft ever since I read it last winter. I learned so much about testing from this book, and what I learned, I apply every day. Last summer, Alan Page posted that he likes my blog and was looking forward to seeing me at PNSQC. The post showed up in my reader, and I swear I nearly had a heart attack. It was so flattering and unexpected. I’ve now met Alan (Hiiiiii!), and have managed to calm my fangrrl tendencies somewhat. This is not to say that there wasn’t some maniacal giggling on my part as Alan showed me around the Microsoft campus last week. Unfortunately, fangrrl behavior is a dimmer and not an on/off switch.

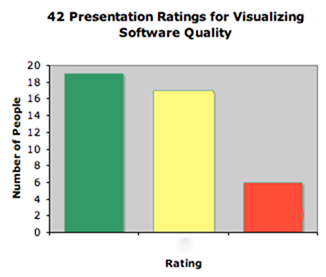

But back to the matter at hand: there is an unanswered question that was asked of my poster. Where’s the quality? This is an excellent question and is, in fact, a question I can’t answer. My inability to answer this question highlights the backstory of my conference presentation. When I submitted my abstract to PNSQC, I did not have a treemap of tests or any idea if I would be successful at making one. I also didn’t know if this would be a valid way to look at tests. Titles are important. They are a succinct description of a project. In my case, I knew that I needed a good title if my presentation was to have a snowball’s chance in hell of being accepted. I decided to go big. “Visualizing Software Quality” was the biggest title I could think of for what I was (and still am) attempting. That is, visualizing all aspects of the software tester’s experience. I don’t want to explore through lists; I want to explore through visualization.

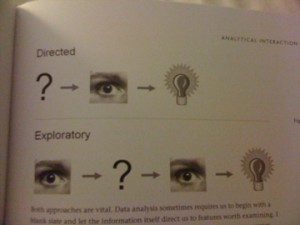

So where’s the quality? I no longer feel that this is the best question or the best title for my investigations. I’m focusing on visualizing aspects of software testing. What is the best way to get a “big picture” view of tests, defects, customer annoyance, wtf’s per minute or any other multi-variate information that testers must face? What information do we really care about and how is it best presented in an interactive and graphic way for us to explore? If we are looking at a “big picture” view of tests and defects, what are we looking for and where do we want to go after we find something interesting in our data? This is entering the realm of “exploratory data analysis.” I can’t answer Mr. Bach’s question, but I’m quite flattered that he was intrigued enough to ask me. And I don’t want a football or a Red Ryder BB gun for Christmas. I think I’d much rather find John Tukey’s book, Exploratory Data Analysis, under my Solstice tree.
